I promised to explain it in detail, and I think I should do it before Uncle Sam decides to default next Tuesday.

First, let me refresh your memory about what I said in my last post. We have two different probability measures for bond DP (default probability): real world and risk neutral. Because real default data is lacking, we cannot bootstrap US Treasury DP from the past, so we turn to the risk-neutral measure. Under the risk-neutral measure, DP is forward-looking. It contains market sentiment and sometimes overestimates or underestimates the real-world probability. There is no right or wrong about risk-neutral DP, because we are measuring the same thing with two different rulers.

Before I jump into the math formulas, I’d like to explain the principle of financial derivative pricing. A derivative’s price is determined by supply and demand in the market. But what drives supply and demand? If the market is complete and people are as smart and rational as we are, price formation should follow rational pricing. For example, say you are selling iced tea. What price is appropriate? A reasonable method is to find the cost of replicating this iced tea: you decompose it into its ingredients, add up the cost of tea, ice and lemon, and add your labor. Suppose you think you deserve more for your hard work and raise the final price. Chances are that once the price gets high enough, someone else will take over your business and in the end push the price back down to a reasonable level. This philosophy also applies to pricing options and other derivatives. The only difference is that replicating a derivative is more sophisticated than decomposing the ingredients of your iced tea.

The rational pricing principle leads us to believe that the market price of a Treasury bond does reflect the market’s view of DP. To get familiar with the terminology I use later, please read the attached picture from my personal notes about two important concepts: hazard rate and survival probability.

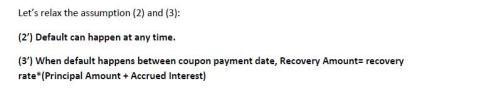

Bootstrapping DP from price is reverse-engineering bond pricing. To make it clear, I will start with a simple example of pricing a bond given survival probabilities.

Suppose you have a bond with a face value of $100, a maturity of 3 years, an annual coupon rate of 5%, a 50% recovery rate, and survival probabilities of 90% at year one, 80% at year two and 70% at year three. To derive the bond price, we decompose it into two parts:

A. Given that no default happens within 3 years

B. Given that default happens within 3 years.

Summing the two conditional expectations, each weighted by its probability, gives the arbitrage-free bond price.
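A minimal sketch of this decomposition in Python, under some simplifying assumptions that are not part of the example above: a flat 3% risk-free rate with annual compounding, default observed only at year ends, recovery paid on face value at the end of the default year, and no coupon paid in that year. The function name `price_risky_bond` is made up for illustration.

```python
def price_risky_bond(face, coupon_rate, recovery, survival, risk_free):
    """Price a defaultable bond from year-end survival probabilities.

    Assumptions (illustrative only): annual compounding at a flat
    risk-free rate, default observed at year ends, recovery paid on
    face value at the end of the default year, no coupon in that year.
    """
    coupon = face * coupon_rate
    survival_leg = 0.0   # coupons and principal, received only while the bond survives
    default_leg = 0.0    # recovery, received only in the year default happens
    prev_survival = 1.0
    for year, surv in enumerate(survival, start=1):
        discount = (1.0 + risk_free) ** -year
        survival_leg += discount * surv * coupon
        # (prev_survival - surv) is the probability of defaulting during this year.
        default_leg += discount * (prev_survival - surv) * recovery * face
        prev_survival = surv
    # Principal is repaid only if the bond survives to maturity.
    survival_leg += (1.0 + risk_free) ** -len(survival) * survival[-1] * face
    return survival_leg + default_leg


price = price_risky_bond(
    face=100.0, coupon_rate=0.05, recovery=0.5,
    survival=[0.9, 0.8, 0.7],
    risk_free=0.03,  # assumed; the example does not give a rate
)
print(f"risky bond price: {price:.2f}")
```

With all survival probabilities set to 1.0 the default leg vanishes and the function returns the ordinary risk-free present value, which is a quick sanity check.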

To bootstrap DP, we go in the reverse direction: we have the risky bond price from the market and the risk-free rate from the Fed, and from these we can compute DP at each time point.
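The reverse direction can be sketched as follows. This is only an illustration under assumptions of my choosing: one bond for each maturity of 1, 2 and 3 years, all with the same coupon and recovery rate, a flat risk-free rate, default at year ends and recovery on face value. The prices are hypothetical quotes, and `bootstrap_survival` is a made-up name.

```python
def bootstrap_survival(prices, face, coupon_rate, recovery, risk_free):
    """Back out year-end survival probabilities from bond prices.

    prices[n-1] is the (hypothetical) market price of an n-year bond.
    Assumes a flat rate, annual coupons, default at year ends and
    recovery paid on face value at the end of the default year.
    """
    coupon = face * coupon_rate
    survival = []
    known = 0.0   # discounted value of cash flows before the final year
    prev = 1.0    # survival probability at the previous year end
    for year, price in enumerate(prices, start=1):
        discount = (1.0 + risk_free) ** -year
        # The final-year value is linear in the unknown S_n:
        #   price = known + discount * (S_n*(coupon + face) + (prev - S_n)*recovery*face)
        surv = ((price - known) / discount - prev * recovery * face) / (
            coupon + face - recovery * face
        )
        survival.append(surv)
        # Roll this year's coupon and recovery into the known part for longer bonds.
        known += discount * (surv * coupon + (prev - surv) * recovery * face)
        prev = surv
    return survival


# Hypothetical prices for 1-, 2- and 3-year bonds, rounded to cents.
implied = bootstrap_survival(
    prices=[96.60, 93.11, 89.55],
    face=100.0, coupon_rate=0.05, recovery=0.5,
    risk_free=0.03,  # assumed flat risk-free rate
)
for year, surv in enumerate(implied, start=1):
    print(f"year {year}: survival {surv:.3f}, cumulative DP {1 - surv:.3f}")
```

Each maturity adds exactly one unknown, so the survival curve is solved one year at a time, from the shortest bond to the longest.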

The difficulty in bootstrapping Treasury DP is that the Treasury bond itself is deemed to define the risk-free rate, but now it may default. We can shift the timeline back to before the Fed changed interest rates and use the Treasury bond price at that time as the risk-free rate.

Here is the full bond price derivation:

I should have used LaTeX to type the formulas, but I’m too lazy to retype them, so I copied and pasted from Word.

The above is the first method: using the bond price to bootstrap DP. Next time I will explain bootstrapping from the CDS spread.

This Saturday I spent my afternoon finishing the mp2 assignment. The assignment is to design and implement a parallel matrix multiplication algorithm using the tiling technique. For those who are interested in the technical details, you can visit iTunes U and search for “Programming Massively Parallel Processors with CUDA” from Stanford University, or go to the UIUC website and search for ece498.
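To give a feel for the tiling idea, here is a plain Python sketch on the CPU. It is not the CUDA kernel from the assignment: on a GPU each tile would be loaded into shared memory by a thread block, while here the tiles only change the loop order. The tile size and the function name `matmul_tiled` are arbitrary choices for illustration.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply two square matrices (lists of lists) one tile at a time."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, tile):            # tile row of C
        for j0 in range(0, n, tile):        # tile column of C
            for k0 in range(0, n, tile):    # matching tiles of A and B
                # Accumulate the partial product of one A tile and one B tile.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, n)):
                        acc = c[i][j]
                        for k in range(k0, min(k0 + tile, n)):
                            acc += a[i][k] * b[k][j]
                        c[i][j] = acc
    return c


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
result = matmul_tiled(a, b, tile=2)
print(result)
```

The `min(...)` bounds handle matrices whose size is not a multiple of the tile size, as in this 3-by-3 example with 2-by-2 tiles.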

Anyway, the whole learning process was very fruitful: I learned how to use ddd and cuda-gdb to debug CUDA in a multi-threaded environment. One bizarre thing I need to mention here is that you can *not* use cuda-gdb if the GPU is running X Windows. My guess is that, unlike a CPU, a GPU doesn’t provide a stack for a reentrant mechanism: once a calculation task is launched on the GPU, you can’t reschedule the task and save registers to a stack as a CPU does. In the middle of coding, I got so frustrated with fixing the Makefile that I decided to go back to the NCSU library and borrow two books on GNU Make.

The outcome is fascinating. The program computes an N-by-N matrix multiplication, and I compared the speed and results between CPU and GPU. When the size is as small as 16, the GPU is slower than the CPU because of the overhead of loading data from host memory to GPU memory. However, as the size increases, the GPU takes an absolute advantage, especially in the 4096×4096 case: the GPU is about 126 times faster than the CPU in the run below (10.9 sec vs. 1372 sec).

I haven’t had time to investigate why the GPU results differ from the CPU results for large matrices. My initial guess is that the Fermi GPU uses FMA (fused multiply-add), so it may be more accurate than my Intel i7 2600K CPU for large matrices, because rounding errors accumulate in this case.
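Whatever the cause turns out to be, bit-for-bit equality is not a safe check here: floating-point addition is not associative, so a different accumulation order can change the last digits. A small Python illustration, not taken from my CUDA code, with an arbitrary tolerance:

```python
import math

a, b, c = 0.1, 0.2, 0.3
left = (a + b) + c     # one accumulation order
right = a + (b + c)    # another order, same mathematical sum

print(left == right)                            # exact comparison fails
print(math.isclose(left, right, rel_tol=1e-9))  # tolerance-based comparison passes
```

This is why comparing CPU and GPU results is better done with a relative tolerance than with `==`.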

A couple of weeks ago I read a paper from NVIDIA explaining GPU floating-point computation. It is an interesting read and I’d recommend it if you have time. It helped me understand some things I may have overlooked in the past. By the way, the author is a very nice guy: even when I rudely pointed out the typos and issues in the paper, he still replied to me politely.

Please contact me if you are interested in playing around with the code.

———————————————————————————–

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 16 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 0.000392 sec

CPU computation complete

CPU computation time: 0.000037 sec

Test PASSED

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 64 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 0.000357 sec

CPU computation complete

CPU computation time: 0.001087 sec

Test PASSED

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 256 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 0.003685 sec

CPU computation complete

CPU computation time: 0.082953 sec

Test PASSED

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 1024 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 0.176093 sec

CPU computation complete

CPU computation time: 17.468283 sec

Test FAILED

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 4096 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 10.921380 sec

CPU computation complete

CPU computation time: 1372.149684 sec

Test FAILED

By Sunday night, I had found a bug in my program: a well-hidden race condition. The issue won’t surface unless the matrix is large enough. In addition, I found that if I run my program under X Windows, the result is not stable. Therefore I tested it in command-line mode (i.e., after running the command ‘init 3’).

[Ricky@gtx NVIDIA_GPU_Computing_SDK]$ C/bin/linux/debug/matrixmul 4096 2>error.txt

Running GPU matrix multiplication...

GPU computation complete

GPU computation time: 11.259042 sec

CPU computation complete

CPU computation time: 1524.171190 sec

Test PASSED

The US is on the brink of a default that could deepen the recession. The reason is way beyond stupid: because of dogfighting among politicians, the US government is not unable but unwilling to pay its bills.

I’m not interested in politics; I’m more interested in a CBS news report comparing the default probability of the US and Greece. It said that the US DP (default probability) is at 1% while Greece’s is at 68%.

So where do these numbers come from?

Unlike survival probability in actuarial science, where we have a large pool of people whose statistics we can study, US bonds have never defaulted before (I hope they won’t in the future). Therefore there is no data from which to compute a physical-measure DP for the sovereign bond.

The DP quoted by CBS is under the so-called risk-neutral measure, which is bootstrapped from financial instrument prices and contains information about the market’s opinions. It is a nice measure because it is forward-looking, while statistics under the physical measure are based on data from the past. As we all know, history won’t repeat itself, so the credibility of the physical measure is always in question. However, prices fluctuate frequently in a volatile market. DP under the risk-neutral measure is likely to be too pessimistic in a crisis and too good to be true during a market boom.

As far as I have learned, there are at least two financial instruments from which DP can be bootstrapped.

1. Bond Price.

For those who are interested, here are the references:

See [Journal of Derivatives, Vol. 8, No. 1, (Fall 2000), pp. 29-40] Valuing Credit Default Swaps I: No Counterparty Default Risk, John Hull and Alan White.

See [JP Morgan] Introducing the J.P. Morgan Implied Default Probability Model: A Powerful Tool for Bond Valuation

2. CDS spread.

See [JP Morgan] Par Credit Default Swap Spread Approximation from Default Probabilities

See [Lehman Brothers] Valuation of Credit Default Swaps
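As a rough preview of the second method, a common back-of-the-envelope approximation (the so-called credit triangle, not the full models in the references above) treats the hazard rate as flat and roughly equal to spread / (1 − recovery). The spread and recovery rate below are made-up inputs, not market quotes, and `default_prob_from_spread` is a hypothetical name.

```python
import math


def default_prob_from_spread(spread, recovery, years):
    """Risk-neutral default probability over `years` under a flat hazard rate.

    Uses the approximation hazard ~= spread / (1 - recovery), with the
    spread given as a decimal per year (0.0050 means 50 basis points).
    """
    hazard = spread / (1.0 - recovery)
    survival = math.exp(-hazard * years)
    return 1.0 - survival


# Hypothetical inputs: 50 bp CDS spread, 40% recovery, 1-year horizon.
dp = default_prob_from_spread(spread=0.0050, recovery=0.40, years=1.0)
print(f"1-year risk-neutral DP: {dp:.2%}")
```

A wider spread or a longer horizon raises the implied DP, which is the direction you would expect when comparing a calm sovereign with a distressed one.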

I will explain these two methods in detail in the coming week.

Gotta play tennis. Have a good day!

There is a difference between a geek and a nerd: if you are a nerd, you will try to figure out all the answers before you start doing something. If you are a geek like me, you will learn what you need to and then try to solve the problem.

Pardon me. I’m not a native English speaker. Sometimes I write in very funny Chinese English. But I think you are smart enough to figure out what I mean.

I’m not a heavy blogger, so I don’t think I will have much spare time to blog very often. This website only serves to show off my hobby and how smart I am. 🙂

I will only blog about the following things:

A. Keeping track of my personal quantitative investment research project. The project started when I was a graduate student, but only now do I have the time and resources to implement it. Some fancy equipment has been purchased, and I have started to design and code.

B. Something I find interesting in the cyber world or in real life. I have a diverse educational background: computer science and financial engineering. I’m particularly interested in physics and statistics. So English is not a barrier for me, because I will write down more formulas than words.